The Big Push for AI Literacy Should Come With Optimism and Skepticism

And a few other questions about K-12 student learning and AI

If preparing the next generation for the AI economy is a matter of national interest—and it is—then this summer’s cascade of teacher-training announcements should give us cautious optimism. The feds, philanthropies, and for-profit groups have made a series of big announcements and written big checks. But without a national strategy, it’s unclear whether these investments will add up to real progress or scattershot experiments. Meanwhile, many teachers remain skeptical, wondering whether AI will actually help their students learn, or just add one more item to their to-do lists.

Here’s what’s been announced so far this year:

White House (April): An executive order launched an AI Education Task Force and a pledge, now signed by 100+ organizations, to provide AI training and resources for youth, parents, and teachers.

AFT (July): The American Federation of Teachers (AFT) announced a $23M partnership with Microsoft ($12.5M), OpenAI ($10M), and Anthropic ($500K) to develop a new AI “Academy.” The “Academy” promises to reach 400,000 educators by 2030, starting with a flagship campus in NYC and a focus on high-needs districts.

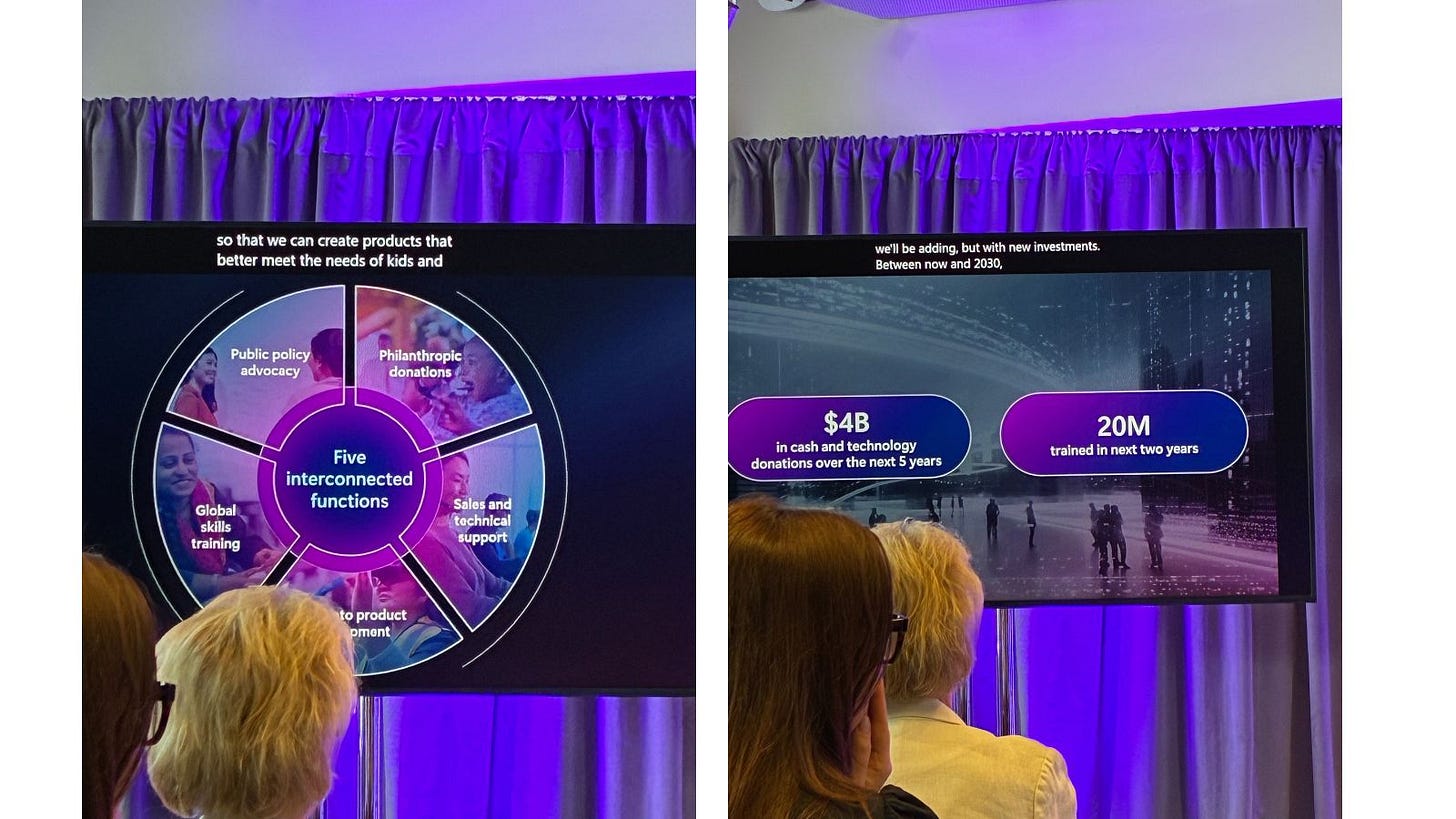

Microsoft (July): Days after AFT’s announcement, Microsoft unveiled a $4B initiative for AI training and research (which included the AFT grant). At the Seattle launch (which I attended), Vice Chair and President Brad Smith and Code.org’s Hadi Partovi highlighted programs like a new “Hour of AI” and a free high school AI course.

OpenAI (mid-July): OpenAI announced a $50M fund to support nonprofit and community organizations in education, health care, and workforce development at every stage of AI adoption. Details are thin.

Melania Trump’s Presidential AI Challenge (September 2025): A splashy nationwide contest for K-12 students and teachers to design AI solutions for community problems (finalists head to D.C. for the chance to win $10K prizes). A contest like this might spark genuine engagement with AI—or it might just reward the most polished PR pitches from already-advantaged communities, while leaving everyday classrooms untouched. EdWeek spoke with me and some other folks on this topic a little while ago.

These are big numbers, big names, and, at least on paper, big ambitions. But the details are fuzzy. Which of these are real, tangible strategies, and which are corporate smoke and mirrors?

That leads me to three big questions:

Are private actors pursuing their own agendas or aligning with public priorities? We now have billions of dollars in play, but no clear national plan. South Korea, Singapore, Estonia (see below), and other countries treat AI education as a pillar of global competitiveness by building national high school curricula, strategies to tackle teacher shortages, and equity-focused initiatives. Will we do the same in the U.S.?

Private capital moves fast, but it also shifts with markets and politics. And then there’s the elephant in the room: the teachers’ unions. Their involvement brings sensitivity to teacher concerns but also raises the possibility that protecting the status quo will outweigh reimagining schools. Local unions are starting to build language about AI into their contracts. At the same time, companies want to avoid controversy and protect market share. That makes it unlikely that these initiatives will explore the deeper, more disruptive possibilities like new school models or rethinking teacher roles.

Will teachers, principals, district leaders, families, and others be brought along? These initiatives don’t say much about involving principals, central offices, schools of education, state leaders, or families. And teachers are pretty jumpy about AI. Some district leaders have cut ties with outside trainers who oversold AI and dismissed teacher concerns. Without thoughtful stakeholder engagement, any hope of shifting a sector toward the future is wishful thinking.

Will the concept of “AI literacy” move beyond the basics? We risk defining AI literacy too narrowly. Right now, many are asking questions like, “What is AI?” instead of,

“How can AI help improve educational outcomes?”

“What are the implications for what students need to know and be able to do?”

“How should we be preparing future educators?”

True AI literacy requires both optimism and skepticism. Teachers must be able to see the positive potential of AI while also understanding whether an AI product actually advances learning, how to use AI to personalize instruction, and how to safeguard students against data privacy breaches and potential manipulation by, for example, AI chatbots.

So here’s my challenge to those leading these efforts: If you want to ensure this isn’t just PR, bring researchers into the room. Fund and use rapid-cycle evaluations to test what works, share results transparently, and build alignment with public priorities. Most districts don’t have the funds or capacity to do that work on their own, but without it, all the money and all the pledges may amount to little more than a summer of press releases.

Big investments in AI literacy are colliding this month with new research and bold experiments. Here are some things that are worth a read (or listen).

On the Need for Solutions

All this comes against the backdrop of CRPE’s newly released 2025 State of the American Student report. This year, we focused on understanding the math crisis: what’s behind it and how we can fix it. As a previous post of mine argued, AI is an integral part of the math equation, but not a silver bullet. The report underlines the stakes: if these new investments in teacher AI literacy don’t transform student experience by closing equity gaps and learning-loss chasms (see new Stanford research below), then we will have missed the mark.

On New Bold Experiments

Speaking of potentially transformational models, Alpha Schools has been on a media blitz lately, and a couple of recent in-depth interviews are well worth some listening time.

Hard Fork’s interview with Alpha Schools’ co-founder MacKenzie Price gives a great overview of what makes the model unique and what an Alpha School day looks like.

Patrick O'Shaughnessy’s interview with Joe Liemandt, the principal of Alpha Schools, is a LONG but fascinating discussion of the backbone technology behind the “two-hour learning model” and plans for growth.

There are some really important reveals in these interviews, including the fact that Alpha has an incredibly ambitious scaling plan (which reminds me a bit of how fast Waymo is scaling). Notably, that plan does not include public schools, focusing instead on homeschool and microschool students. The founders make no mention of equitable access or social change, just a straight-on competitive model for kids whom the school chooses to admit (e.g., gifted and talented) and whose parents can afford it.

I have not seen the schools myself (Price didn’t grant my request to visit), so I was eager to learn more about the learning model. The distinguishing aspects of the model seem to be less about innovative use of AI than about motivating and guiding students through engaging projects and using adults as guides and motivators rather than instructors, something schools like Summit Public Schools and others have been doing for a while with much more diverse student populations.

I’m all for more exciting models and have nothing against Alpha Schools’ marketing plan. But where is the investment and incubation of new AI-powered schools that DO aim to serve less affluent and more complex learners at a similar scale? We’ll be taking on this question soon. Meanwhile, send me your thoughts!

On What Other Countries Are Doing

The UK’s Tony Blair Institute for Global Change, in collaboration with an official from Estonia, issued a compelling vision for AI readiness in UK schools, noting the risks of inaction: unequal access, poor use of AI in schools, loss of global competitiveness, and more. I loved this quote from Estonia’s Minister of Education and Research:

“In Estonia, we are taking a leap into the AI era in education by bringing artificial-intelligence tools into our classrooms this autumn. We are driven by the recognition of the risk of cognitive offloading, where students use AI to bypass learning rather than deepen their understanding. This requires a shift in pedagogical approaches towards emphasising problem-solving, critical thinking, collaborative work, and ethical reasoning.”

Will the U.S. approach to AI literacy and teaching be oriented around those principles? I am not so sure, given the fragmented nature and lack of detail around these initiatives.

On New Research, Commentary, and Resources to Help Connect the Dots

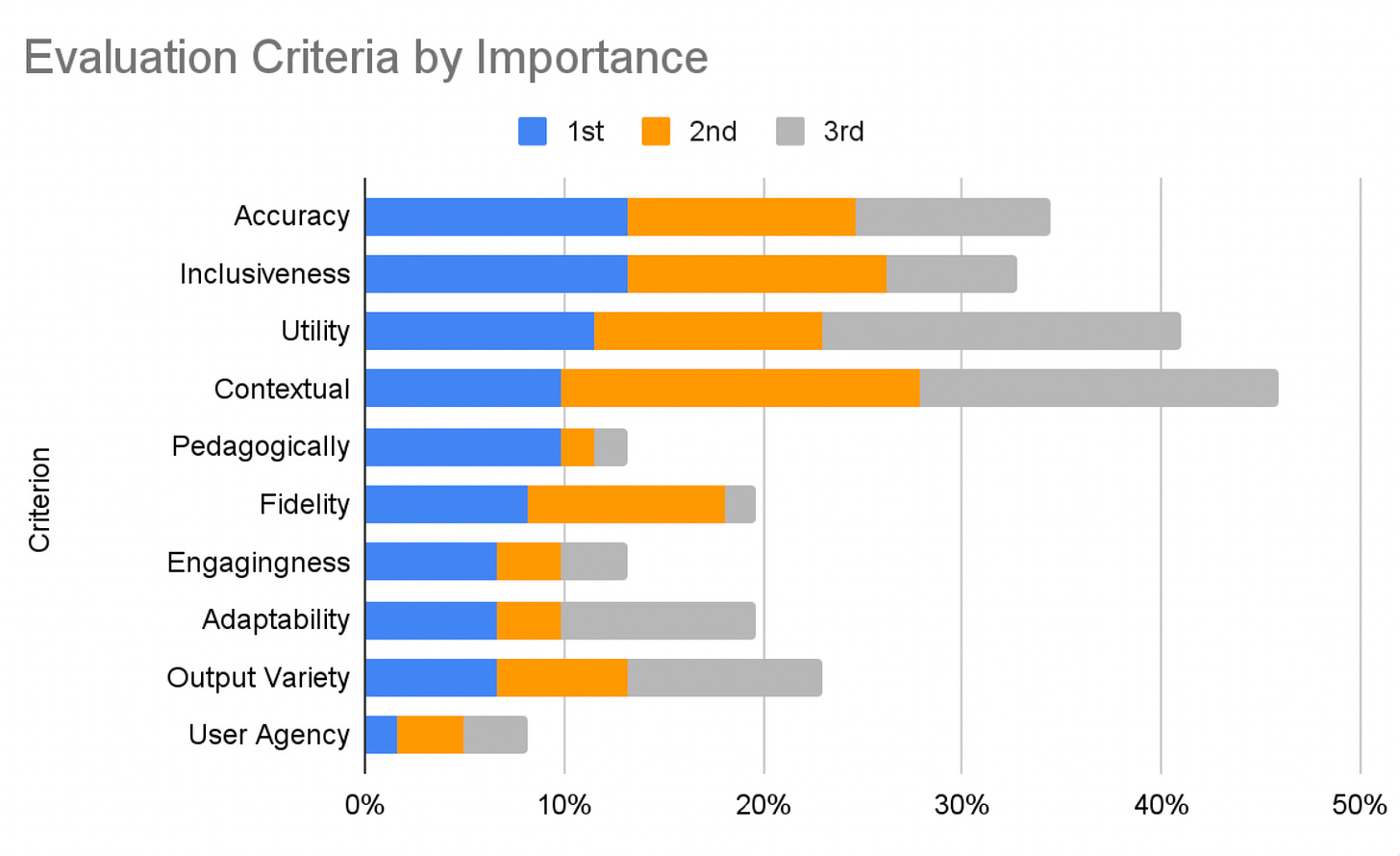

New research from Stanford examines teacher use of AI math tools. Findings were very much in line with CRPE’s recent research on teacher experimentation with AI:

Teachers valued many different criteria but placed the highest importance on accuracy, inclusiveness, and utility.

Teachers’ evaluation criteria revealed critical tensions in AI edtech tool design.

A collaborative approach helped teachers quickly arrive at nuanced criteria.

Another report on how workplaces are changing under AI, especially regarding the hollowing out of entry-level jobs, signals what schools should be preparing students for now, not “eventually.”

AI for Equity announced its new AI Innovation Index, an effort to collect data on school district AI adoption in three domains: 1) Student AI Agency, 2) Staff AI Empowerment, and 3) C-Suite Condition-Setting. CRPE is helping with this, and we’re excited about the potential to learn from this field scan.

“3 questions for K–12 leaders amid the AI tutoring boom”—a quick litmus test for whether schools are prepared to receive tangible benefits (not just hype) from AI tutors.

Three related resources on AI and youth well-being: This recent Senate hearing on AI chatbots and kids is worth a watch (heartbreaking testimony from parents). This piece on AI “therapists” and teen mental health digs into the emerging evidence. Alex Kotran of aiEDU has a new podcast series for parents: Raising Kids in the Age of AI.

Why All This Matters Together

There may be a bit of a constellation forming here: policy and investments are moving forward, schools are experimenting, data is confirming that student learning (especially in math) is deeply unsettled, and AI is both part of the hope and a possible risk for young people. Together, all of these factors help us see what’s at stake—not just in terms of what’s possible, but what’s necessary if we want to avoid compounding existing inequities, learning gaps, and pressures on young people.

Final Words

Last week was an emotional one for many, with the rapidly accelerating divisiveness and violence in this country. I was moved by this wise post from my friend Babak Mostaghimi, and I’m sharing it with his permission.

Just a friendly reminder from a guy who spends a LOT of time learning about AI-powered algorithms and what they are designed to do.

Your Facebook/Instagram/BlueSky/Truth Social/X/insert social media platform, news sources, and other things guided by algorithms are primed to share content with you that gets you emotionally riled up and your eyeballs locked on a screen that can serve you advertisements and make them money. They also help to serve bad actors who are trying to influence how we relate to or hate one another.

It will serve up images that are similar to ones you've liked or even paused on previously to make that connection. It will serve up language that seems to activate you from your text messages and private messages…so if you're finding yourself increasingly worked up, starting to dehumanize your fellow countrymen … remind yourself that someone benefits by getting you worked up and focused on very specific things at a detriment to your kindness and love for the world.

The world only gets better when we are kind to one another, when we pour into our relationships and communities, and when we turn down the temperature knob that extreme elements keep trying to turn up in order to spread chaos and destruction in our lives.

And if you wonder why this matters, just look to any country where there is or has been civil war or genocide. These are places where neighbors and families were turned against themselves and each other and extreme destruction has occurred.

Please take care of yourself and each other, get off the screens, go hug someone, and be kind because those are the things that make our world better.

Thanks to June Han, Dan Silver, and Bree Dusseault for tips and contributions to this post.